
Our species has begun to scrute the inscrutable shoggoth! With Matt Freeman

LINKS

Anthropic’s latest AI Safety research paper, on interpretability

Anthropic is hiring

Episode 93 of The Mind Killer

Talkin’ Fallout

VibeCamp

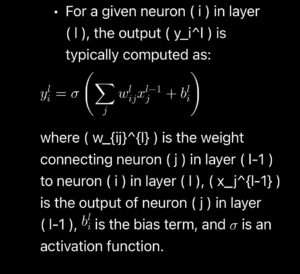

0:00:17 – A Layman’s AI Refresher

0:21:06 – Aligned By Default

0:50:56 – Highlights from Anthropic’s Latest Interpretability Paper

1:26:47 – Guild of the Rose Update

1:29:40 – Going to VibeCamp

1:37:05 – Feedback

1:43:58 – Less Wrong Posts

1:57:30 – Thank the Patron

Our Patreon, or if you prefer Our SubStack

Hey look, we have a discord! What could possibly go wrong?

We now partner with The Guild of the Rose, check them out.
